Greetings everyone, I have a question about the circuit I am building for my Final Year Project.

After setting up the circuit and the sensor, I calculated that the output generated by the ACS712 is only 0.1 mV. The output signal is this low because the current flowing through the circuit is low. The microcontroller I am using to read the analog signal is an Arduino Mega, whose ADC has 10-bit resolution, so the smallest step it can resolve is 4.88 mV. I therefore want to use an amplifier to boost the analog signal from the ACS712.
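As a quick check of the 4.88 mV figure, here is a minimal sketch of the ADC step-size arithmetic. It assumes the Arduino Mega's default 5 V analog reference, which the post does not state explicitly; the function names are made up for illustration.

```python
# Assumption: default 5 V analog reference (not stated in the post).
V_REF = 5.0
ADC_BITS = 10


def adc_step_volts(v_ref: float, bits: int) -> float:
    """Voltage represented by one ADC count."""
    return v_ref / (2 ** bits)


def adc_counts(v_in: float, v_ref: float, bits: int) -> int:
    """Ideal ADC reading for v_in, clamped to the valid count range."""
    counts = int(v_in / adc_step_volts(v_ref, bits))
    return max(0, min(counts, 2 ** bits - 1))


step = adc_step_volts(V_REF, ADC_BITS)
print(f"ADC step: {step * 1000:.2f} mV")                        # 4.88 mV
print("Counts at 0.1 mV:", adc_counts(0.1e-3, V_REF, ADC_BITS))  # 0
print("Counts at 0.1 V:", adc_counts(0.1, V_REF, ADC_BITS))      # 20
```

Under these assumptions a 0.1 mV signal reads as 0 counts, while 0.1 V would read as about 20 counts, which is why some amplification is needed before the ADC.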

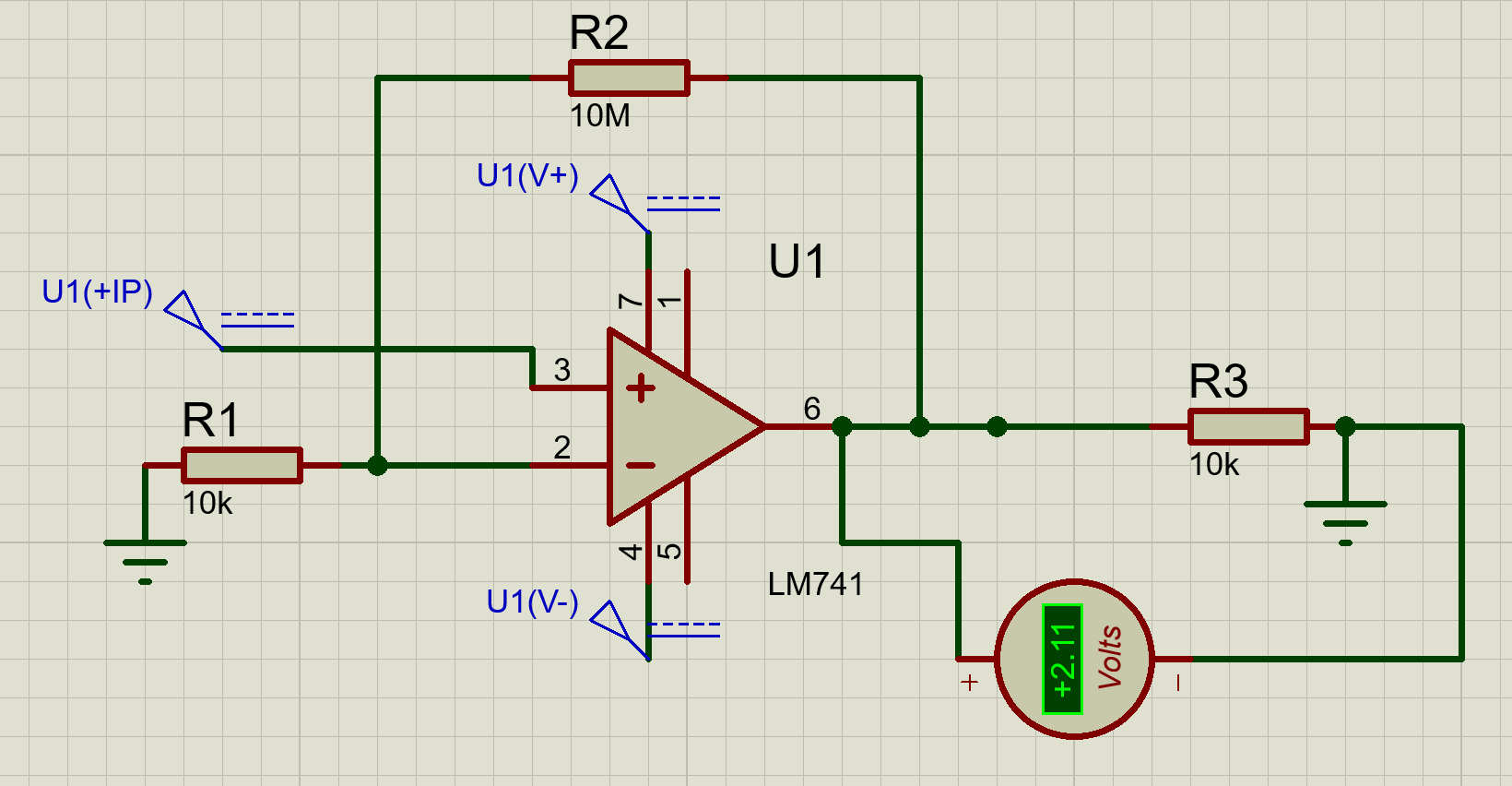

The circuit below shows the amplifier setup (the op-amp used is an LM741).

Using the formula (1 + R2/R1), the calculated gain is 1000. But when I run the simulation, the output voltage at pin 6 is 2.11 V rather than the expected 0.1 V (1000 × 0.1 mV). Why is this happening, and is there a better approach or op-amp that I can use to amplify the 0.1 mV input to a readable 0.1 V? Or am I doing this entirely wrong?
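For reference, the expected-output arithmetic can be sketched as below. The resistor values are hypothetical, picked only so that (1 + R2/R1) comes out to 1000, since the actual values appear only in the schematic. This is an ideal op-amp model, so it gives the 0.1 V hand calculation and not the 2.11 V from the simulation.

```python
def non_inverting_gain(r1: float, r2: float) -> float:
    """Ideal closed-loop gain of a non-inverting amplifier: 1 + R2/R1."""
    return 1 + r2 / r1


# Hypothetical resistor values chosen to give a gain of 1000.
R1 = 1_000.0     # ohms (assumed)
R2 = 999_000.0   # ohms (assumed)

V_IN = 0.1e-3    # 0.1 mV sensor output, as calculated in the post

gain = non_inverting_gain(R1, R2)
v_out_ideal = gain * V_IN

print(f"Gain: {gain:.0f}")
print(f"Ideal output: {v_out_ideal:.3f} V")
```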
